Facebook, Microsoft target faster services with new AI server designs

IDG NEWS SERVICE: Facebook's Big Basin and Microsoft's reworked Project Olympus have more space for GPUs to accelerate machine learning.

Facebook on Wednesday rolled out some staggering statistics related to its social networks. Each day, users watch 100 million hours of video, 400 million people use Messenger, and more than 95 million photos and videos are posted on Instagram.

That puts a heavy load on Facebook's servers in data centers, which help orchestrate all these services to ensure timely responses. In addition, Facebook's servers use machine learning technologies to improve services, with one visible example being image recognition.

The story is similar for Microsoft, which is continually looking to balance the load on its servers. For example, Microsoft's data centers apply machine learning for natural language services like Cortana.

Both companies introduced new open-source hardware designs to speed up responses from such artificial intelligence services. The designs will also let the companies offer more services through their networks and software. The server designs were introduced at the Open Compute Project U.S. Summit on Wednesday.

Other companies can use these server designs as a reference to design their own servers in-house and then send them to Asia for mass manufacturing, something Facebook and Google have been doing for years. Financial organizations have also been experimenting with OCP designs to build servers for their own use.

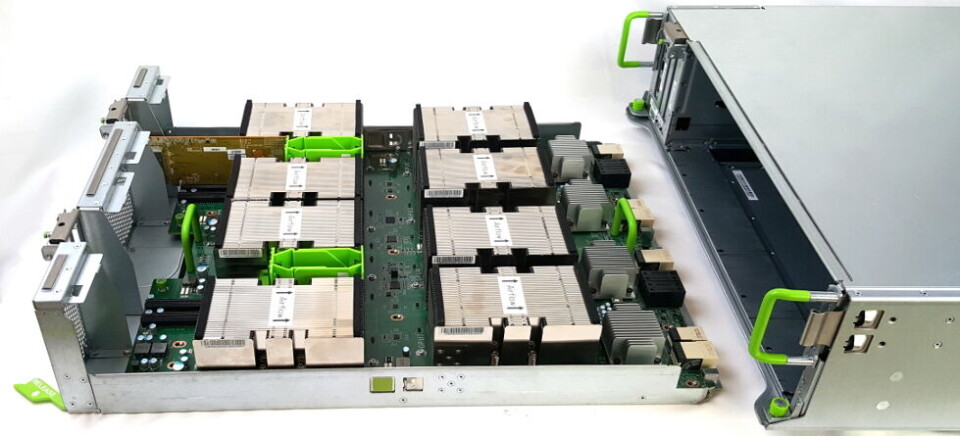

Facebook's Big Basin is an unorthodox server box that the company has termed "JBOG" -- for Just a Bunch Of GPUs -- that can deliver unprecedented power for machine learning. The system does not have a CPU and operates as an independent box that needs to be connected to discrete server and storage boxes.

Big Basin delivers on the promise of decoupling processing, storage, and networking units in data centers. When storage and processing sit in independent pools, each can be scaled up much faster than when both are packed into a single server box, as they are today. Computation is also faster when processing and storage are networked closely together. Decoupled units also share power and cooling resources, which reduces data centers' electric bills.

The Big Basin system can be connected to Tioga Pass, a new Facebook open-source dual-CPU server design.

A decoupled data center design is important for companies like Facebook and Google, which are buying thousands of servers to meet their growing processing needs. By decoupling storage, processing, and other resources, these companies can scale up web services and machine learning tasks much faster.

Intel is also chasing a similar design with its Rack Scale architecture, and companies like Dell and Hewlett Packard Enterprise offer blueprints for such server implementations.

Facebook's Big Basin system has eight Nvidia Tesla P100 GPU accelerators, connected in a mesh architecture via the super-fast NVLink interconnect. The mesh interconnect is similar to one in Nvidia's DGX-1 server, which is used in an AI supercomputer from Fujitsu in Japan.

The other new AI server design came from Microsoft, which unveiled a reworked Project Olympus design with more space for AI co-processors. Microsoft also announced HGX-1, a GPU accelerator developed with Nvidia and Ingrasys that is similar to Facebook's Big Basin but can be scaled to link 32 GPUs together.

Project Olympus is a more conventional server design that doesn't require massive changes to existing server installations. It's a 1U rack server with the CPUs, GPUs, memory, storage, and networking all in one box.

Microsoft's new server design has a universal motherboard slot that will support the latest server chips, including Intel's Skylake and AMD's Naples. Project Olympus will also do something rarely seen in servers: cross over from x86 to ARM, with support for Qualcomm's Centriq 2400 and Cavium's ThunderX2 chips.

Qualcomm will show a motherboard and server based on the Project Olympus design at the OCP summit. The Qualcomm server will run Windows Server, marking the first time the OS has been shown running on an ARM chip.

The universal x86 and ARM motherboard support will allow customers to switch between chip architectures without purchasing new hardware. Bringing ARM support to Project Olympus is one of the big achievements of the new server design, Kushagra Vaid, general manager for Azure hardware infrastructure at Microsoft, said in a blog entry.

There's also space for Intel's FPGAs (field-programmable gate arrays), which will speed up search and deep-learning applications in servers. Microsoft uses FPGAs to deliver faster Bing results. The server also has slots for up to three PCI-Express cards such as GPUs, up to eight NVMe SSDs, Ethernet, and DDR4 memory. It also has multiple fans and heatsinks, plus batteries to keep the server running in case of power loss.

The Project Olympus HGX-1 supports eight Nvidia Pascal GPUs via the NVLink interconnect technology. Four HGX-1 AI accelerators can be linked to create a massive machine learning cluster of 32 GPUs.

Today’s data centers are undergoing a massive shift to support the rapid adoption of AI computing, said Ian Buck, vice president and general manager of accelerated computing at Nvidia.

"The new OCP designs from Microsoft and Facebook show that hyperscale data centers need high-performance GPUs to support the enormous demands of AI computing," Buck said.